Welcome!

We believe that expected value is one of the most important concepts for making better decisions in an uncertain world. Understanding it helps us think more clearly about risks, rewards, and tradeoffs.

Nate Silver in On the Edge:

Society would be generally better off–I’ll confidently contend–if people understood the nature of expected value and specifically the importance of low-probability, high-impact events, whether they come in the form of fantastic potential payoffs or catastrophic risks.

What is expected value?

Expected value (EV) is the probability-weighted average of all possible outcomes. It’s computed by multiplying each possible outcome by its probability of occurring, then adding up the results.

\text{Expected Value} = \sum \text{(Probability of each outcome)} \cdot \text{(Value of each outcome)}

In math terms:

\mathbb{E}[X] = \sum\limits_{i} P(X=x_i) \cdot x_i

EV: Rolling a die

What’s the expected value of rolling a single die?

There are 6 faces (1, 2, 3, 4, 5, 6) and each has probability of 1/6.

\begin{align*}
\mathbb{E}[\text{Single Die Roll}] &= \frac{1}{6}(1) + \frac{1}{6}(2) + \frac{1}{6}(3) + \frac{1}{6}(4) + \frac{1}{6}(5) + \frac{1}{6}(6) \\
&= \frac{1}{6} + \frac{2}{6} + \frac{3}{6} + \frac{4}{6} + \frac{5}{6} + \frac{6}{6} = \frac{21}{6} = \frac{7}{2} \\
&= 3.5
\end{align*}

The expected value is 3.5.
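The same sum can be sketched in code. This is a minimal illustration rather than part of the original tutorial: the function name `expectedValue` and the `{ p, value }` outcome shape are assumptions chosen for the example.

```javascript
// Minimal sketch: expected value as a probability-weighted sum.
// `expectedValue` and the { p, value } shape are hypothetical names.
function expectedValue(outcomes) {
  // The probabilities describe all possible outcomes, so they should sum to 1
  // (a small tolerance absorbs floating-point rounding).
  const totalP = outcomes.reduce((sum, o) => sum + o.p, 0);
  if (Math.abs(totalP - 1) > 1e-9) {
    throw new Error(`Probabilities sum to ${totalP}, expected 1`);
  }
  // E[X] = sum over i of P(X = x_i) * x_i
  return outcomes.reduce((sum, o) => sum + o.p * o.value, 0);
}

// A fair six-sided die: faces 1..6, each with probability 1/6.
const die = [1, 2, 3, 4, 5, 6].map((face) => ({ p: 1 / 6, value: face }));
const dieEV = expectedValue(die);
console.log(dieEV.toFixed(2)); // "3.50"
```

The tolerance check is a design choice: six copies of 1/6 do not add up to exactly 1 in floating-point arithmetic, so an exact comparison would reject a valid die.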

EV: Ice cream coin flip

Suppose that you are buying an ice cream. The store is selling an ice cream cone for $7.

They also have a special offer:

Flip a coin

If the coin is heads, you buy the ice cream for $10

If the coin is tails, you get the ice cream for free

[Figure: two coins, each with probability P = 1/2. Heads: $10. Tails: $0.]

What is the expected value of the coin flip?

What is the probability of each outcome? Heads and tails are both 50% likely.

What is the value of each outcome? The cost for heads is $10 and the cost for tails is $0.

The expected value of the coin flip is as follows:

\begin{align*}
\text{EV} &= P(\text{Heads}) \cdot V(\text{Heads}) + P(\text{Tails}) \cdot V(\text{Tails}) \\
&= (0.5) \cdot (\$10) + (0.5) \cdot (\$0) \\
&= \$5
\end{align*}

This means that the expected cost of the coin flip is $5, which is lower than the standard price of $7.
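What the $5 figure means in the long run can be checked with a small simulation. The sketch below is an illustration under stated assumptions: `simulateCoinOffer` is a hypothetical helper, and the seeded generator (the public-domain mulberry32 routine) is used only so that repeated runs give the same sequence. `Math.random()` would serve equally well.

```javascript
// Small seeded PRNG (mulberry32) so the run is reproducible.
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296; // uniform in [0, 1)
  };
}

const COIN_OUTCOMES = [
  { result: "heads", cost: 10 },
  { result: "tails", cost: 0 },
];

// Flip `n` fair coins and return the running average cost after each flip.
// `simulateCoinOffer` is a hypothetical name used for this sketch.
function simulateCoinOffer(n, random) {
  const runningAverages = [];
  let totalCost = 0;
  for (let i = 0; i < n; i++) {
    const flip = COIN_OUTCOMES[Math.floor(random() * 2)];
    totalCost += flip.cost;
    runningAverages.push(totalCost / (i + 1));
  }
  return runningAverages;
}

const averages = simulateCoinOffer(10000, mulberry32(42));
for (const n of [10, 100, 10000]) {
  console.log(`after ${n} flips: average cost $${averages[n - 1].toFixed(2)}`);
}
```

With only a few flips the average can sit far from $5, since the first flip alone gives either $0 or $10. As the number of flips grows, the running average should settle close to the expected value.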

[Interactive chart: average cost ($) against number of flips, with buttons to flip 1, 10, or 100 coins and reference lines at the expected value ($5) and the regular price ($7).]

The EV calculation helps make decisions by providing a framework to evaluate options with uncertain outcomes. In most cases, the option with the better expected value is the better decision, so here we should take the coin flip.

But not always. Other considerations, such as risk tolerance, also matter: some people prefer a guaranteed price of $7 to the chance of paying $10.
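One common way to put a number on that risk is the spread of the outcomes around the expected value. The variance and standard deviation below are not part of the original example; they are a sketch that reuses the same probabilities and costs, with EV = $5 from the calculation above.

\begin{align*}
\text{Variance} &= P(\text{Heads}) \cdot (V(\text{Heads}) - \text{EV})^2 + P(\text{Tails}) \cdot (V(\text{Tails}) - \text{EV})^2 \\
&= (0.5) \cdot (10 - 5)^2 + (0.5) \cdot (0 - 5)^2 \\
&= 25 \\
\text{Standard Deviation} &= \sqrt{25} = \$5
\end{align*}

The guaranteed $7 price has a standard deviation of $0, so the coin flip trades a lower expected cost for a wider spread of possible prices.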

EV: Drawing a card

Here’s a simple card game where you get to draw 1 card and get the prize indicated on that card.

[Figure: four cards showing the prizes 1, 1, 2, and 3, each drawn with probability P = 1/4.]

Each card is equally likely to be selected with probability P = 1/4. The probabilities (weights) always sum to 1 because they describe all possible outcomes.

Let’s compute the EV:

\begin{align*}
\mathbb{E}[\text{Draw Card}] &= \frac{1}{4}(1) + \frac{1}{4}(1) + \frac{1}{4}(2) + \frac{1}{4}(3) \\
&= \frac{1}{4} + \frac{1}{4} + \frac{2}{4} + \frac{3}{4} \\
&= \frac{7}{4} \\
&= 1.75
\end{align*}

Therefore the expected value, or average outcome, when drawing a card is 1.75.

Note that EV is not a possible outcome (we couldn’t actually draw 1.75), but rather summarizes the set of possible outcomes.
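Because two of the four cards show the same prize, the calculation can also be done per prize rather than per card: a prize of 1 has probability 2/4, while 2 and 3 each have probability 1/4. The sketch below tallies those probabilities from the deck and takes the weighted sum. The helper name `expectedPrize` is an assumption made for this example.

```javascript
// Hypothetical helper: derive each prize's probability from a deck of
// equally likely cards, then take the probability-weighted sum.
function expectedPrize(cardValues) {
  const probabilities = new Map();
  for (const value of cardValues) {
    // Each card contributes 1/N to the probability of its prize.
    const previous = probabilities.get(value) || 0;
    probabilities.set(value, previous + 1 / cardValues.length);
  }
  let ev = 0;
  for (const [value, p] of probabilities) {
    ev += p * value;
  }
  return { probabilities, ev };
}

const { probabilities, ev } = expectedPrize([1, 1, 2, 3]);
for (const [value, p] of probabilities) {
  console.log(`P(${value}) = ${p}`); // P(1) = 0.5, P(2) = 0.25, P(3) = 0.25
}
console.log(ev); // 1.75
```

Both groupings give 1.75, since merging identical outcomes only adds their probabilities together.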

[Interactive chart: running average of the drawn prizes against number of draws, with buttons to draw 1, 10, or 100 cards and a reference line at the expected value (1.75).]

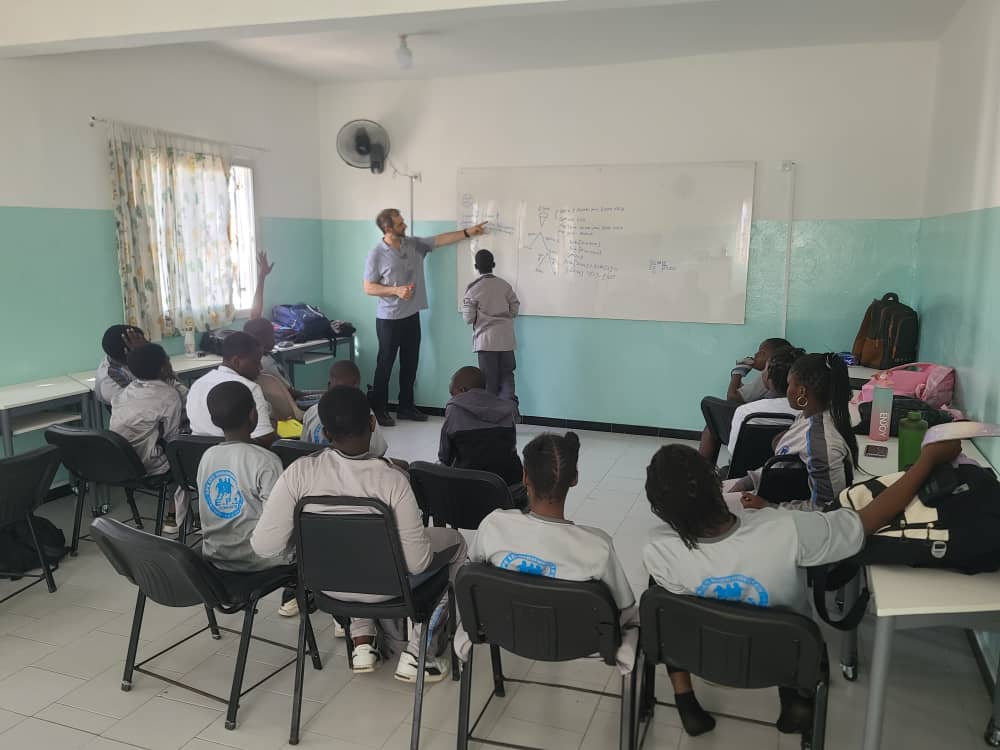

What we do

EV Tutorial (this website): Learn about expected value through our tutorial and interactive examples

EV Workshops: In-person workshops on expected value

EV Day: A day of EV-centric and adjacent workshops, along with a charity poker tournament

Poker Camp: Workshops and courses on AI and applied rationality through the lens of poker

EV Fellowship: Contribute your strengths to the EVF in areas like research, coding, writing, logistics, running workshops, and creating videos